A UK-based software company providing sector-focused SaaS solutions across industries such as healthcare, legal, education, and government has been using SQList for many years to export data from SharePoint to SQL Server for third-party companies.

Their clients often require SharePoint data to be exported when migrating to another SharePoint site with a different structure or to an entirely different system. This typically happens during mergers or acquisitions, where IT systems need to be integrated or consolidated.

Until recently, the client was able to use SQList out of the box, as the number of sites, lists, and document libraries was manageable. However, their latest project presented a highly unusual scenario – one that even we at AxioWorks initially believed SQList might not be able to handle.

The task? Exporting data from approximately 140,000 SharePoint sites. This very large organisation had created a separate SharePoint site for each project across its entire structure, leading to an immense number of sites. Although each site was relatively small in terms of lists, libraries, and data volume, the sheer scale was unprecedented.

SQList wasn’t designed to handle this volume, especially via its user interface for configuring replications. Setting up replications manually for this many sites was simply not feasible. To complicate matters further, each site was actually a site collection, which meant the built-in site scanning feature in SQList could not be used.

After evaluating several options, the client still chose SQList – working with our team here at AxioWorks to come up with a creative, technical workaround.

By default, SQList stores its replication configurations in its own SQL CE (Compact Edition) database, but it can be configured to use a SQL Server database instead. This flexibility allowed the client to manage replication configurations using SQL scripts rather than relying on the UI.

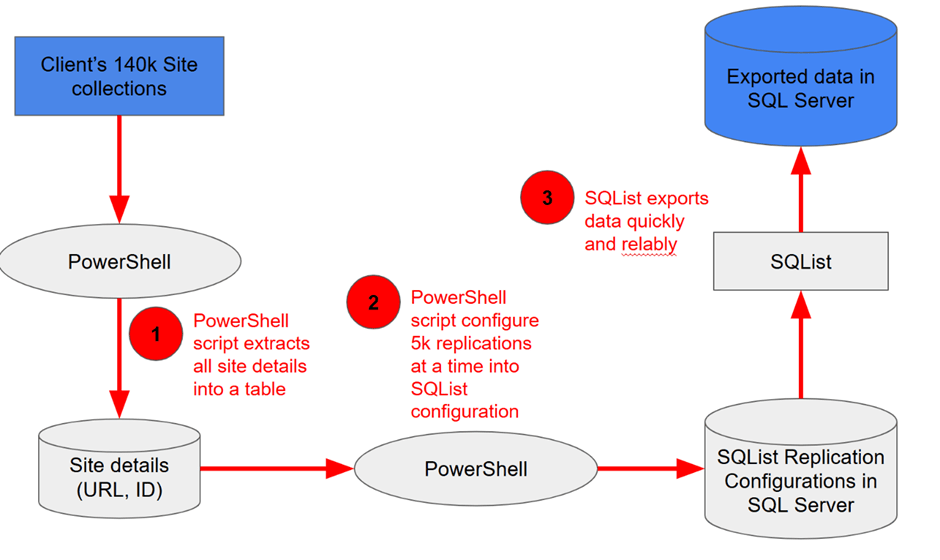

Using a PowerShell script, the client first exported all site details – specifically the URLs and unique IDs – into a separate SQL table.

With this in place, they configured roughly 5,000 replications at a time by injecting them directly into the SQL Server database. SQList then ran the data extraction for each batch. Once a batch was completed, they scripted the next one and repeated the process. They also utilised SQList’s built-in multi-instance feature to distribute the replications, thereby maximising parallelisation and performance.

While the solution was far from straightforward, it proved highly effective. Once SQList took charge of the export, it automatically handled complex issues such as throttling, item limits, and column mapping, ensuring the extracted data was accurate and complete.

In the end, what began as a daunting challenge became a testament to the flexibility and power of SQList in managing enterprise-scale data migrations.

Step-by-Step: Large-Scale Data Migration with SQList

- Extract Site Information

We used a PowerShell script to gather the URLs and unique IDs of approximately 140,000 SharePoint site collections and stored them in a SQL table. - Batch Configuration of Replications

Another script configured batches of around 5,000 replication settings at a time, written directly into the SQL Server database used by SQList. - Run Data Export with SQList

SQList used these scripted configurations to perform data exports, handling one batch at a time and repeating the process across multiple instances to maximise efficiency.

Best Practices for Large-Scale SharePoint Data Migration

Successfully migrating data from SharePoint at scale – especially across tens or even hundreds of thousands of site collections – requires more than just the right tool; it demands a strategic approach.

Based on real-world experience from one of the largest SharePoint migrations we’ve seen, here are key best practices to help you streamline your own data migration journey and avoid common pitfalls:

- Automate Configuration Management: Use SQL Server to store replication settings and manage them via scripts for efficiency.

- Batch Processing: Migrate in chunks (e.g. 5,000 sites at a time) to ensure manageability and control.

- Parallel Processing: Use SQList’s multi-instance capabilities to speed up the process and handle load.

- Use Scripting Tools: Leverage PowerShell for automating site detail extraction and replication setup.

- Pre-Emptive Problem Handling: Select a tool like SQList that handles throttling, item limits, and schema mapping automatically.

- Test Before You Scale: Pilot smaller migrations to uncover potential issues early.

- Be Flexible: Customise your approach and adapt tools as necessary for large-scale needs.

- Document Everything: Keep thorough records for troubleshooting and future migrations.

***

Discover the power of SQList for yourself – just like our client who migrated data from 140,000 SharePoint sites. Whether you’re handling a few sites or facing enterprise-scale complexity, SQList is built to adapt.

Try SQList free for 30 days and experience the speed, flexibility, and reliability first-hand.

Or, get a personalised demo and see how it can transform your data analysis and integration processes.